I have been working with eye movements since 2004, when, together with Prof. Ober, I proposed the idea of identifying people by their eye movement characteristics. I took a break in 2008-2012, when I was Dean of the Computer Science Faculty at the Silesian Higher School of Computer Science, but since 2012 I have been back on track!

We work on various problems connected with eye tracking:

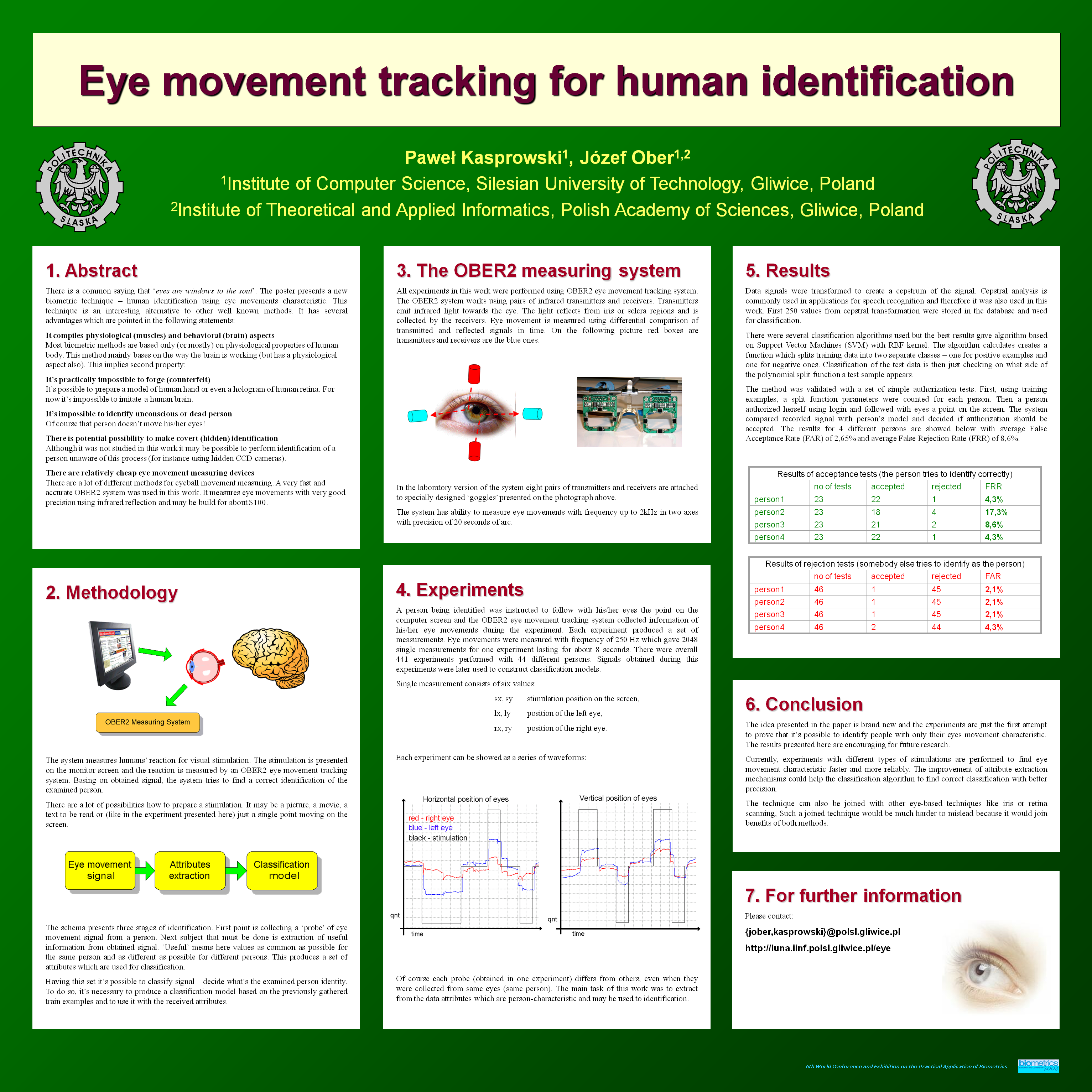

The idea that eye movement characteristics may reveal a person's identity was first presented in London at the VII World Conference and Exhibition on Practical Application of Biometrics (BIOMETRICS'2003), in the poster entitled [Eye movement tracking for human identification]. The poster won the Best Poster on Technological Advancement prize.

The original idea was described in 2004 in the paper [Eye Movements in Biometrics] and extended in 2005 in [Enhancing eye-movement-based biometric identification method by using voting classifiers]. You may also be interested in my PhD thesis, [Human identification using eye movements], but please cite the papers instead :)!

For general information on the topic, you can also look at my presentation at the Biometrics 2012 Conference in London, entitled "Eye Movements Biometrics - Where you look shows who you are".

In 2012, together with Oleg Komogortsev from Texas State University, I organized the First Eye Movement Verification and Identification Competition (EMVIC 2012). Details of the competition may be found in the paper [First Eye Movement Verification and Identification Competition at BTAS 2012] and on the competition's web page. Four datasets were published, and participants uploaded their results through the competition's web page and kaggle.com.

In 2014, together with Katarzyna Harezlak, we organized the next edition of the competition (EMVIC 2014). Thanks to our sponsor, SensoMotoric Instruments, we were able to award an SMI RED 250 eye tracker to the winner, Vinnie Monaco. The competition and its results are described in the paper [The Second Eye Movement Verification and Identification Competition (EMVIC)].

Preparing datasets for these competitions was a great opportunity to learn what should be avoided when preparing data for behavioral biometrics experiments. Our experience shows that it is very important to maintain a proper time interval between subsequent samples of the same subject. It turned out that samples taken at very short intervals (e.g. 10 minutes apart) tend to be artificially similar, probably due to the person's similar mood or mental and physical state. As a result, recognition results are artificially inflated. See our general paper about behavioral biometrics [The influence of dataset quality on the results of behavioral biometric experiments] or the more specific paper about eye movement biometrics [The Impact of Temporal Proximity between Samples on Eye Movement Biometric Identification] for details.
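To make the point about temporal proximity concrete, here is a minimal Python sketch of how enrollment/probe pairs could be built so that two samples of the same subject are only compared when they were recorded at least a chosen gap apart. The `Sample` class, the `temporally_separated_pairs` helper and the one-day gap in the example are hypothetical illustrations; the papers above do not prescribe this code or this threshold.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Sample:
    """One recording session (hypothetical structure)."""
    subject: str
    recorded_at: datetime


def temporally_separated_pairs(samples, min_gap):
    """Return (earlier, later) pairs of the same subject recorded at least min_gap apart."""
    by_subject = {}
    for s in samples:
        by_subject.setdefault(s.subject, []).append(s)
    pairs = []
    for subject_samples in by_subject.values():
        subject_samples.sort(key=lambda s: s.recorded_at)
        for i, first in enumerate(subject_samples):
            for second in subject_samples[i + 1:]:
                # Skip pairs recorded too close together: they tend to be artificially similar.
                if second.recorded_at - first.recorded_at >= min_gap:
                    pairs.append((first, second))
    return pairs


t0 = datetime(2014, 1, 1, 10, 0)
samples = [
    Sample("s1", t0),
    Sample("s1", t0 + timedelta(minutes=10)),  # too close to the first session
    Sample("s1", t0 + timedelta(days=7)),
]
pairs = temporally_separated_pairs(samples, min_gap=timedelta(days=1))
print(len(pairs))  # 2: only pairs involving the session one week later
```

The 10-minute pair is excluded, so any recognition rate computed on `pairs` is not boosted by near-duplicate sessions.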

In 2015, Oleg Komogortsev and Ioannis Rigas (one of the winners of EMVIC 2012) organized their own eye movement biometrics competition, BioEye 2015. I took part in this competition and took third place. Some details of my method can be found in our paper [Using Dissimilarity Matrix for Eye Movement Biometrics with a Jumping Point Experiment]. We also tried other methods, which are described in the paper [Application of Dimensionality Reduction Methods for Eye Movement Data Classification].

In 2016 we published a paper in which we used a fusion of two modalities, mouse dynamics and eye movements, to identify people. The paper, entitled [Fusion of eye movement and mouse dynamics for reliable behavioral biometrics], was published in the Pattern Analysis and Applications journal.
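One common way to combine two behavioral modalities is score-level fusion. The sketch below assumes that each modality already produces a similarity score for every enrolled identity; it min-max normalizes both score sets and takes a weighted sum. The function names, the equal 0.5 weight and the example scores are hypothetical, and this is not necessarily the fusion scheme used in the paper.

```python
def min_max_normalize(scores):
    """Rescale an {identity: score} dict to the range [0, 1]."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 0.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


def fuse_and_identify(eye_scores, mouse_scores, eye_weight=0.5):
    """Weighted-sum score-level fusion; both dicts must cover the same identities."""
    eye = min_max_normalize(eye_scores)
    mouse = min_max_normalize(mouse_scores)
    fused = {k: eye_weight * eye[k] + (1 - eye_weight) * mouse[k] for k in eye}
    best = max(fused, key=fused.get)  # identity with the highest fused similarity
    return best, fused


eye_scores = {"alice": 0.9, "bob": 0.6, "carol": 0.3}    # hypothetical similarities
mouse_scores = {"alice": 0.2, "bob": 0.8, "carol": 0.5}  # hypothetical similarities
best, fused = fuse_and_identify(eye_scores, mouse_scores)
print(best)  # bob
```

Normalizing first matters because the two classifiers may produce scores on very different scales; without it, one modality would dominate the sum.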

Most devices that register eye movements, called eye trackers, return information about the relative position of an eye, without information about the gaze point. To obtain this information, it is necessary to build a function that maps the eye tracker's output to the horizontal and vertical coordinates of a gaze point. This is typically done using eye positions recorded while the examined person looks at a set of predefined points (called Points of Regard, or PoRs). There are several problems that must be addressed when preparing the calibration scenario:

All these problems were discussed in our publications.

In [Towards Accurate Eye Tracker Calibration - Methods and Procedures] we checked the correlation between calibration results and:

In [Guidelines for eye tracker calibration using points of regard] we checked various ways to build the calibration function. This function is in fact a regression function that maps eye positions to PoRs. We tried three different functions: polynomial regression, an artificial neural network (ANN) and support vector regression (SVR).
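As an illustration of the polynomial variant only, here is a minimal NumPy sketch that fits a second-order polynomial mapping from raw eye-tracker output to screen coordinates by least squares. The choice of second-order terms, the helper names and the synthetic 3x3 grid of PoRs are assumptions made for this example; the ANN and SVR variants are not shown.

```python
import numpy as np


def design_matrix(eye):
    """Second-order polynomial terms of raw eye-tracker output (ex, ey)."""
    ex, ey = eye[:, 0], eye[:, 1]
    return np.column_stack([np.ones_like(ex), ex, ey, ex * ey, ex ** 2, ey ** 2])


def fit_calibration(eye, targets):
    """Least-squares coefficients mapping eye output to gaze point (x, y)."""
    coeffs, *_ = np.linalg.lstsq(design_matrix(eye), targets, rcond=None)
    return coeffs  # shape (6, 2): one column per screen axis


def apply_calibration(coeffs, eye):
    """Map raw eye output to estimated gaze points."""
    return design_matrix(eye) @ coeffs


# Synthetic example: raw readings for a 3x3 grid of PoRs and a made-up
# mapping to screen pixels (a real calibration uses measured readings).
ex, ey = np.meshgrid([-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0])
eye = np.column_stack([ex.ravel(), ey.ravel()])
targets = np.column_stack([
    500 + 300 * eye[:, 0] + 20 * eye[:, 0] ** 2,
    400 + 250 * eye[:, 1] + 10 * eye[:, 0] * eye[:, 1],
])
coeffs = fit_calibration(eye, targets)
predicted = apply_calibration(coeffs, eye)
print(np.abs(predicted - targets).max())  # close to zero for this synthetic mapping
```

With nine PoRs and six coefficients per axis the fit is overdetermined, which is one reason calibration grids usually contain more points than the model has parameters.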

In [Idiosyncratic repeatability of calibration errors during eye tracker calibration] we checked whether calibration results are repeatable for participants, i.e. whether the same participant has a similar error in subsequent calibrations. It turned out that they are, but only to some extent.

As classic calibration with points of regard is somewhat unnatural for users, we also tried alternative scenarios. In the paper [Study on Participant-controlled Eye Tracker Calibration Procedure] we proposed a calibration scenario that involves interaction with users: the users had to click points of regard and observe an arrow displayed on the screen. The experiments showed that calibration results for this scenario are better than for the classic one, but its main drawback is that it takes longer.

We also checked whether calibration is necessary when only a simple task, such as entering a PIN, is performed. Our experience is described in the paper [Using Non-Calibrated Eye Movement Data To Enhance Human Computer Interfaces].

We are still searching for ways to avoid calibration altogether. In the paper [Implicit Calibration Using Predicted Gaze Targets], presented at ETRA 2016, we proposed an algorithm that automatically calibrates the eye movement signal using probable gaze locations during users' normal activities.

It might seem easy to use gaze pointing as a replacement for traditional human-computer interaction modalities such as a mouse or trackball, especially as more and more affordable eye trackers become available. However, it turns out that users often experience gaze-contingent interfaces as difficult and tedious. There are multiple reasons for these difficulties. First, eye tracking requires prior calibration, which is unnatural for users. Second, gaze-contingent interfaces suffer from the so-called Midas Touch problem: it is difficult to detect the moment when a user wants to click a button or any other object on the screen. Finally, eye pointing is not as precise and accurate as, e.g., mouse pointing.
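A widely used mitigation for the Midas Touch problem is dwell-time selection: a target counts as clicked only after the gaze has rested on it for a minimum time. The Python sketch below shows the idea; the `Target` class, the circular target shape and the 500 ms dwell time are assumptions for illustration, not the method from our papers.

```python
from dataclasses import dataclass


@dataclass
class Target:
    """A circular on-screen target (hypothetical layout, pixel units)."""
    name: str
    x: float
    y: float
    radius: float

    def contains(self, gx: float, gy: float) -> bool:
        return (gx - self.x) ** 2 + (gy - self.y) ** 2 <= self.radius ** 2


def dwell_selections(gaze, targets, dwell_ms):
    """Return names of targets 'clicked' by dwelling on them.

    gaze: (timestamp_ms, x, y) samples sorted by time.
    A target fires once the gaze has stayed inside it for dwell_ms;
    the gaze must leave and re-enter before it can fire again.
    """
    selections = []
    current, entered_at, fired = None, None, False
    for t, gx, gy in gaze:
        hit = next((tg for tg in targets if tg.contains(gx, gy)), None)
        if hit is not current:
            # Gaze moved to another target (or empty space): restart the timer.
            current, entered_at, fired = hit, t, False
        elif current is not None and not fired and t - entered_at >= dwell_ms:
            selections.append(current.name)
            fired = True
    return selections


targets = [Target("A", 100, 100, 50), Target("B", 400, 100, 50)]
gaze = [(t, 100, 100) for t in range(0, 600, 100)] + [(t, 400, 100) for t in range(600, 900, 100)]
print(dwell_selections(gaze, targets, dwell_ms=500))  # ['A']: gaze stays on B for only 200 ms
```

The dwell time is a trade-off: shorter values make selection faster but bring back accidental clicks, while longer values make the interface feel slow.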

In the paper [Eye Movement Tracking as a New Promising Modality for Human Computer Interaction] we addressed these problems and presented the results of an experiment that compares three modalities: mouse, touchpad and gaze in a simple shooting game.

In the paper [Cheap and easy PIN entering using eye gaze] we studied the possibility of using eye tracking for PIN entry (e.g. at an ATM). The main drawback of the presented method was the need to calibrate every user before PIN entry.

Therefore, in the paper [Using Non-Calibrated Eye Movement Data To Enhance Human Computer Interfaces] we checked whether uncalibrated data may be used to enter simple information, and it turned out that it can.

Eye tracking has many applications in medicine. To name a few, it may be used in the diagnosis and therapy of:

In our research we have focused on helping children with various disabilities. So far we have published two papers on this topic. The first one, [Application of Eye Tracking to Support Children's Vision Enhancing Exercises], shows that vision enhancing exercises can be made fun for children by using an eye tracker to turn boring exercises into interesting, interactive games.

We also try to help children with severe disabilities (such as CVI). This is a difficult problem; our first experience was published in the paper [Application of Eye Tracking for Diagnosis and Therapy of Children with Brain Disabilities].

In the paper [Utilizing Gaze Self Similarity Plots to Recognize Dyslexia when Reading] we showed that Gaze Self Similarity Plots are very useful for diagnosing dyslexia in adults.
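For readers unfamiliar with the idea, here is a minimal NumPy sketch of a self-similarity matrix for a gaze recording, assuming a Gaze Self Similarity Plot is built from the Euclidean distances between every pair of gaze points (raw samples or fixations) in temporal order, so that repeated visits to the same region show up as dark off-diagonal cells when plotted. The function name and example points are hypothetical, and the classification step used for dyslexia is not shown.

```python
import numpy as np


def gaze_self_similarity(points):
    """Pairwise Euclidean distances between gaze points.

    points: array of shape (n, 2) with (x, y) positions in temporal order.
    Returns an (n, n) matrix whose cell [i, j] is the distance between points i and j.
    """
    diff = points[:, None, :] - points[None, :, :]  # broadcast to (n, n, 2)
    return np.sqrt((diff ** 2).sum(axis=-1))


points = np.array([[0.0, 0.0], [3.0, 4.0], [0.0, 0.0]])  # hypothetical fixations
gssp = gaze_self_similarity(points)
print(gssp)
```

The matrix can be rendered as an image (e.g. with matplotlib's `imshow`); in reading, regressions back to earlier words appear as low-distance cells away from the diagonal.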

Additionally, together with Robert Koprowski and Marek Rzendkowski we created a new type of automated, computerized perimeter designed to test the visual field in children and adults. The device was described in the paper entitled [Simplified automatic method for measuring the visual field using the perimeter ZERK 1].

The eye movement signal is traditionally divided into fixations and saccades, and many studies analyze the characteristics of fixations and saccades. Therefore, it is very important to separate fixations and saccades precisely. This is a very interesting and challenging topic with many publications. We have published one paper in which we analysed how the parameters of a fixation detection algorithm influence the final features. If you are interested in this subject, please take a look at [Evaluating Quality of Dispersion Based Fixation Detection Algorithm].
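To show what the parameters of a dispersion-based detector are, here is a minimal Python sketch of the classic dispersion-threshold (I-DT) algorithm as commonly described in the literature: a window of at least the minimum fixation length is grown while its dispersion stays under a threshold. The threshold of 10 and the 3-sample minimum in the example are arbitrary assumptions, not values recommended in our paper.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    start: int  # index of the first sample in the fixation
    end: int    # index of the last sample (inclusive)
    x: float    # centroid x
    y: float    # centroid y


def dispersion(window):
    """Dispersion as (max x - min x) + (max y - min y)."""
    xs = [p[0] for p in window]
    ys = [p[1] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt(points, max_dispersion, min_samples):
    """Dispersion-threshold (I-DT) fixation detection on equally spaced samples.

    min_samples stands in for the minimum fixation duration
    (duration in seconds multiplied by the sampling rate).
    """
    fixations = []
    i, n = 0, len(points)
    while i + min_samples <= n:
        j = i + min_samples  # current window is points[i:j]
        if dispersion(points[i:j]) <= max_dispersion:
            # Grow the window while the dispersion stays under the threshold.
            while j < n and dispersion(points[i:j + 1]) <= max_dispersion:
                j += 1
            window = points[i:j]
            cx = sum(p[0] for p in window) / len(window)
            cy = sum(p[1] for p in window) / len(window)
            fixations.append(Fixation(i, j - 1, cx, cy))
            i = j  # continue after the detected fixation
        else:
            i += 1  # drop the first sample and slide the window
    return fixations


samples = [(0, 0)] * 5 + [(100, 100)] * 2 + [(200, 200)] * 5
for fix in idt(samples, max_dispersion=10, min_samples=3):
    print(fix)
```

Changing `max_dispersion` or `min_samples` changes how many fixations are found and how long they are, which in turn changes any features computed from them.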

In [Review and Evaluation of Eye Movement Event Detection Algorithms] we compared different algorithms used for this task.
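As an example of another family of event detection algorithms, here is a minimal Python sketch of velocity-threshold identification (I-VT): each sample is labeled as part of a saccade if the point-to-point velocity exceeds a threshold, and as part of a fixation otherwise. The 100 deg/s threshold, the 100 Hz sampling rate and the input in degrees of visual angle are assumptions for the example, not values taken from the paper.

```python
import math


def ivt(points, sampling_hz, velocity_threshold):
    """Label each sample 'fix' or 'sac' using a velocity threshold (I-VT).

    points: (x, y) gaze positions in degrees of visual angle, equally spaced in time.
    velocity_threshold: threshold in degrees per second.
    """
    if not points:
        return []
    labels = ["fix"]  # the first sample has no predecessor; assume a fixation
    dt = 1.0 / sampling_hz
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        velocity = math.hypot(x1 - x0, y1 - y0) / dt
        labels.append("sac" if velocity > velocity_threshold else "fix")
    return labels


gaze = [(0.0, 0.0), (0.01, 0.0), (5.0, 0.0), (5.01, 0.0)]
print(ivt(gaze, sampling_hz=100, velocity_threshold=100))  # ['fix', 'fix', 'sac', 'fix']
```

I-VT is fast and has a single parameter, but it is sensitive to noise in the signal, which is one reason different detectors can produce different fixation sets from the same recording.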

The Big Data problem affects eye tracking as well. We have more and more eye tracking data to analyze, and storing it properly is becoming a challenging task. We addressed this problem in our publications. In [The Eye Movement Data Storage - Checking the Possibilities] we checked the efficiency of various data storage options for storing and querying eye movement data. In [Disk Space and Load Time Requirements for Eye Movement Biometric Databases] we focused on eye movement databases for biometric identification purposes.

We constantly try to explore new applications of eye tracking.

In the paper [The Eye Tracking Methods in User Interfaces Assessment] we analyzed how eye tracking may be used to evaluate user interfaces and proposed some guidelines.

In the paper [Mining of Eye Movement Data to Discover People Intentions] we tried to determine whether a person was lying by analyzing their eye movements.